- Introduction to Kubernetes: The Orchestration Powerhouse

- Kubernetes Features

- Architecture of Kubernetes

- Kubernetes in DevOps: Enabling Automation with Efficiency

- Guardians of the Cluster: PKI and TLS/SSL in your Kubernetes

- Kubernetes Security Risks and Best Practices

- How Encryption Consulting Can Help

- Conclusion

Introduction to Kubernetes: The Orchestration Powerhouse

Kubernetes, an open-source tool initially developed by Google, is a powerful platform for container orchestration. Container orchestration refers to the automation of deploying, scaling, and managing containerized applications. Kubernetes simplifies this process by providing a robust framework to run distributed systems effectively, offering better control over application workloads. Its standout features include container autoscaling, load balancing, and self-healing, making it indispensable for modern infrastructure.

Let’s understand its utility with an example. Imagine a company running an ERP (enterprise resource planning) system that supports various departments like inventory, HR, and finance. As the company grows, these systems face increasing demand, requiring additional resources to stay responsive. Kubernetes steps in by dynamically scaling containers for each module, balancing resources across servers, and restarting failed services. In essence, it ensures that applications remain highly available and performant even under peak usage.

Kubernetes also performs tasks like health checks, which monitor the “liveness” and “readiness” of applications to ensure they function as intended. Kubernetes is maintained by the Cloud Native Computing Foundation (CNCF) and can be deployed almost anywhere: on-premises, in the cloud, or in hybrid environments.

What is Docker Swarm?

Docker Swarm is a native clustering and orchestration tool for Docker containers. It allows you to deploy and manage multiple containers across a group of machines (known as a swarm). Swarm simplifies container management by enabling developers to set up, scale, and maintain their applications without needing external tools. It provides features like:

- Ease of Use: Simple setup and straightforward configuration.

- Native Docker Integration: Seamlessly integrates with Docker CLI and ecosystem.

- Basic Scaling and Load Balancing: Scales containers and distributes traffic evenly across nodes.

However, Docker Swarm is primarily suited for smaller-scale applications or environments that don’t require advanced orchestration features.

How is Kubernetes Different from Docker Swarm?

While Docker Swarm is useful for simpler container orchestration, Kubernetes offers advanced capabilities better suited for large-scale, production-level applications:

- Multi-Node Clusters: Kubernetes manages applications across clusters of nodes, ensuring high availability and scalability.

- Self-Healing: Automatically restarts or replaces failing containers without manual intervention.

- Rolling Updates: Gradually updates application versions without downtime for seamless transitions.

- Load Balancing: Efficiently distributes network traffic across multiple containers to maintain stability.

- Extensive Ecosystem: Supports integration with various cloud providers and tools, making it highly versatile.

- Robust Monitoring: Exposes container logs and resource metrics, and integrates with tools such as Prometheus for detailed monitoring of containers and workloads.

In comparison, Docker Swarm is simpler but lacks some of the advanced features that Kubernetes provides, making it less suitable for complex or distributed systems.

Kubernetes Features


Horizontal Scaling

Kubernetes enables horizontal scaling, which means adding more Pods to handle increased workloads. It can be done manually or automatically using the Horizontal Pod Autoscaler (HPA).

For example, when a streaming service releases a new show and user traffic spikes, the HPA monitors metrics like CPU or memory usage to decide whether to add more Pods to maintain performance. When you define resource requests and limits for each container, Kubernetes can allocate and bin-pack resources efficiently, preventing any single server from being overloaded and optimizing overall usage.
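The scaling behavior described above can be sketched with a HorizontalPodAutoscaler manifest. This is a minimal example, assuming a Deployment with the hypothetical name video-api already exists, its containers declare CPU requests, and the metrics-server add-on is installed so the HPA can read CPU usage. The replica bounds and the 70% target are illustrative values.

```yaml
# Sketch: autoscale a hypothetical "video-api" Deployment on CPU utilization.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: video-api-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: video-api            # hypothetical Deployment name
  minReplicas: 2               # never scale below two Pods
  maxReplicas: 10              # never scale above ten Pods
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # keep average CPU near 70% of the requested CPU
```

With this in place, the HPA adds Pods (up to 10) when average CPU utilization across the Deployment's Pods rises above the target, and removes them (down to 2) when load falls.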

Self-Healing

If a container crashes or a Pod becomes unhealthy, Kubernetes automatically intervenes. The kubelet agent continuously monitors the health of containers using liveness and readiness probes.

- Liveness Probes: Check if the application inside a container is still running. If not, Kubernetes restarts the container.

- Readiness Probes: Verify if a container is ready to serve requests. If it is not ready, Kubernetes removes the Pod from its Service's endpoints, so traffic goes to other healthy Pods.
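Both probe types are declared per container. The sketch below assumes a plain nginx container and uses its default page at / as a stand-in health endpoint; a real application would usually expose a dedicated health endpoint, and the timing values are illustrative.

```yaml
# Sketch: a Pod with liveness and readiness probes on an nginx container.
apiVersion: v1
kind: Pod
metadata:
  name: web
  labels:
    app: web
spec:
  containers:
    - name: web
      image: nginx:1.27
      ports:
        - containerPort: 80
      livenessProbe:           # kubelet restarts the container if this keeps failing
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 10
        periodSeconds: 10
      readinessProbe:          # Pod receives Service traffic only while this passes
        httpGet:
          path: /
          port: 80
        periodSeconds: 5
```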


Service Discovery and Load Balancing

Kubernetes simplifies how services discover each other and balances traffic across them. It uses DNS-based service discovery by assigning a DNS name to each service. Pods are assigned unique IPs, and Kubernetes ensures that traffic reaches the right destination automatically.

Load Balancing Mechanisms:

- ClusterIP: Exposes the service within the cluster, distributing traffic across Pods.

- NodePort: Allows external access by exposing a port on each Node in the cluster.

- LoadBalancer: Integrates with cloud provider load balancers to distribute external traffic efficiently.
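A minimal Service manifest might look like the following. The name web, the app: web label, and the port numbers are hypothetical; the type field is what selects between the three mechanisms listed above.

```yaml
# Sketch: expose Pods labeled app=web through a stable Service.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: ClusterIP        # change to NodePort or LoadBalancer to expose it outside the cluster
  selector:
    app: web             # traffic is balanced across ready Pods carrying this label
  ports:
    - port: 80           # port the Service listens on
      targetPort: 8080   # port the container listens on
```

Other Pods in the same namespace can then reach it by the DNS name web, or by web.default.svc.cluster.local from anywhere in the cluster, assuming it lives in the default namespace and the cluster uses the default cluster.local domain.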


Storage Orchestration

Kubernetes efficiently manages storage needs by supporting various backends, such as local disks, cloud storage, and network file systems.

- Persistent Volumes (PVs) and Persistent Volume Claims (PVCs): PVs are storage resources in the cluster, provisioned either in advance by an administrator or on demand; PVCs let applications request storage with specific requirements, such as size and access mode.

- Dynamic Provisioning: Kubernetes automates storage allocation through Storage Classes, which define the backend type (e.g., SSD, HDD) for storage requests.

- Container Storage Interface (CSI): Kubernetes uses CSI to integrate third-party storage solutions like AWS EBS, Google Persistent Disk, or NFS, offering flexibility and extensibility for any storage provider.
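Dynamic provisioning ties these pieces together: a StorageClass names a CSI driver, and a PVC that references the class causes a matching PV to be created on demand. This sketch assumes an AWS cluster with the EBS CSI driver (ebs.csi.aws.com) installed; the class name fast-ssd, the claim name db-data, and the 20Gi size are illustrative.

```yaml
# Sketch: a StorageClass backed by the AWS EBS CSI driver...
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-ssd
provisioner: ebs.csi.aws.com             # CSI driver that creates the volumes
parameters:
  type: gp3                              # EBS volume type (SSD)
reclaimPolicy: Delete                    # delete the volume when the claim is deleted
volumeBindingMode: WaitForFirstConsumer  # provision in the zone where the Pod is scheduled
---
# ...and a claim that triggers dynamic provisioning from it.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-data
spec:
  accessModes:
    - ReadWriteOnce                      # mountable read-write by a single node
  storageClassName: fast-ssd
  resources:
    requests:
      storage: 20Gi
```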


Secret and Configuration Management

Kubernetes manages sensitive information, such as API keys, encryption keys, and user credentials, using Secrets. By default, Secrets are only base64-encoded, not encrypted, when stored in etcd. Cluster administrators can enable encryption at rest for Secrets by configuring the API server with encryption providers (such as aescbc, secretbox, or a KMS plugin) for added security.

For example, if an API key changes, you update the Secret once, and Pods that mount it as a volume eventually receive the new value without anyone rebuilding container images. Secrets consumed as environment variables are refreshed only when the Pod restarts. This keeps sensitive data secure and up to date.
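Encryption at rest for Secrets is configured on the API server rather than per Secret. The sketch below shows an EncryptionConfiguration using the secretbox provider. It assumes you control the kube-apiserver flags (the file is passed with --encryption-provider-config), and the key value is a placeholder you would generate yourself. Managed Kubernetes services often expose this through their own KMS integration instead.

```yaml
# Sketch: encrypt Secrets at rest in etcd (placeholder key, do not reuse).
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # The first provider encrypts new writes; every listed provider is tried on reads.
      - secretbox:
          keys:
            - name: key1
              secret: <BASE64-ENCODED-32-BYTE-KEY>   # placeholder: random 32-byte key, base64-encoded
      # identity (no encryption) keeps Secrets written before this change readable.
      - identity: {}
```

Secrets written before the change stay unencrypted in etcd until they are rewritten, so the Kubernetes documentation recommends reading and replacing all existing Secrets after enabling a provider.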

Automated Rollouts and Rollbacks

When you deploy a new feature, a Deployment rolls out the update gradually, replacing old Pods only as new ones pass their readiness checks. If the new version fails to make progress within the configured progress deadline, Kubernetes marks the rollout as stalled, and you can return to the last stable version with kubectl rollout undo. Progressive delivery tools such as Argo Rollouts or Flagger go further, analyzing signals like the ones below and rolling back automatically. Let us look at what these signals are.

- Health Probes: Kubernetes checks whether containers are healthy with liveness probes (to restart containers) and readiness probes (to decide whether they receive traffic).

- Error Rates: The number of errors or failed requests, usually collected by a monitoring system such as Prometheus, reveals potential issues during updates.

- Application Performance: Response times, resource usage, and throughput show whether the new version performs as expected.
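The pace and safety of a rolling update are controlled in the Deployment spec. This is a minimal sketch; the name cart, the image, the /healthz endpoint, and the numbers are hypothetical. With maxUnavailable set to 0, old Pods are removed only after new ones pass their readiness probe.

```yaml
# Sketch: a Deployment that rolls out one extra Pod at a time.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cart
spec:
  replicas: 4
  progressDeadlineSeconds: 300   # report the rollout as stalled after 5 minutes without progress
  revisionHistoryLimit: 5        # keep old ReplicaSets so earlier revisions can be restored
  selector:
    matchLabels:
      app: cart
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                # at most one Pod above the desired count during the update
      maxUnavailable: 0          # never drop below the desired number of ready Pods
  template:
    metadata:
      labels:
        app: cart
    spec:
      containers:
        - name: cart
          image: registry.example.com/cart:2.0.0   # hypothetical image
          readinessProbe:
            httpGet:
              path: /healthz     # hypothetical health endpoint
              port: 8080
```

You can follow the rollout with kubectl rollout status deployment/cart and, if the new version misbehaves, return to the previous revision with kubectl rollout undo deployment/cart.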


Automatic Bin Packing

Kubernetes optimizes resource usage by efficiently “packing” containers onto nodes based on their resource requirements. The scheduler places each Pod on a node with enough unreserved CPU and memory to cover the Pod's resource requests, so the total requests on a node never exceed its allocatable capacity.

For example, when launching multiple streaming sessions, Kubernetes balances the load across available servers, maximizing resource usage while preventing performance degradation.
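Bin packing works from the resource requests and limits declared on each container. In this sketch (the image name and values are hypothetical), the scheduler reserves half a CPU core and 256 MiB of memory for the Pod when choosing a node, while the limits cap what the container may use at runtime.

```yaml
# Sketch: requests drive scheduling decisions; limits cap runtime usage.
apiVersion: v1
kind: Pod
metadata:
  name: stream-session
spec:
  containers:
    - name: transcoder
      image: registry.example.com/transcoder:1.0   # hypothetical image
      resources:
        requests:            # reserved on the node when the Pod is scheduled
          cpu: "500m"        # half a CPU core
          memory: "256Mi"
        limits:              # hard ceiling enforced while the container runs
          cpu: "1"
          memory: "512Mi"
```

A container that exceeds its CPU limit is throttled, while one that exceeds its memory limit is terminated (OOM-killed) and restarted according to the Pod's restart policy.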

Batch Execution

Kubernetes manages batch jobs using resources like Jobs and CronJobs:

- Jobs: Ensure that tasks, such as analytics or backups, are completed successfully.

- CronJobs: Schedule recurring tasks, like daily data processing.

Example YAML for a Batch Job:

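```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: example-job
spec:
  template:
    spec:
      containers:
        - name: batch-job
          image: busybox
          # Print a message, then simulate 30 seconds of work
          command: ["sh", "-c", "echo Hello Kubernetes! && sleep 30"]
      # Restart the container if it exits with an error
      restartPolicy: OnFailure
```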
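A CronJob wraps the same kind of Job template in a schedule. The following sketch covers the daily data processing case mentioned above; the name, schedule, and command are illustrative placeholders.

```yaml
# Sketch: run a small batch task every day at 02:00.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-report
spec:
  schedule: "0 2 * * *"         # cron syntax; uses the controller manager's time zone unless spec.timeZone is set
  concurrencyPolicy: Forbid     # skip a run if the previous one is still going
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: report
              image: busybox
              command: ["sh", "-c", "date; echo Processing daily data"]
          restartPolicy: OnFailure
```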

IPv4/IPv6 Dual Stack

Kubernetes supports dual-stack networking, allowing Pods and services to have both IPv4 and IPv6 addresses. This ensures compatibility with a wide range of user devices and networks.

For example, if users in different regions use either IPv4 or IPv6, a dual-stack cluster (which also requires a network plugin that supports dual-stack) can give Pods and Services addresses from both families so everyone can connect without issues. This dual-stack implementation is especially useful for global applications with diverse user bases.
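On a cluster where dual-stack networking is enabled, a Service can ask for addresses from both families. This minimal sketch assumes Pods labeled app: web listening on port 8080 (both hypothetical). PreferDualStack falls back to a single family on clusters that are not dual-stack, and the optional ipFamilies field can be added to control which family is listed first.

```yaml
# Sketch: a Service that receives both IPv4 and IPv6 cluster IPs when available.
apiVersion: v1
kind: Service
metadata:
  name: web-dual
spec:
  ipFamilyPolicy: PreferDualStack   # dual-stack if the cluster supports it, otherwise single-stack
  selector:
    app: web
  ports:
    - port: 80
      targetPort: 8080
```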

Designed for Extensibility

Kubernetes supports customization through its API and controller mechanisms. Developers can extend Kubernetes by implementing plugins for specific needs:

- Monitoring: You can integrate tools like Prometheus to collect application metrics and display them on dashboards. Prometheus uses the Kubernetes API to discover the Pods and Services it should scrape. For example, if your organization wants to monitor application health, you can deploy Prometheus in the cluster, and it will continuously collect metrics to provide valuable insights, adapting Kubernetes to your monitoring needs.

- Storage: Using Container Storage Interface (CSI) plugins, Kubernetes can integrate third-party storage solutions like AWS EBS or NFS, enabling dynamic volume provisioning.
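Custom Resource Definitions (CRDs) are one of the API mechanisms behind this extensibility: they add new object types to the Kubernetes API, which your own controllers can then watch and act on. The sketch below defines a hypothetical Backup resource in the made-up example.com group and creates one instance of it. Apply the CRD first; without a controller that reconciles Backup objects, the API server simply stores them.

```yaml
# Sketch: register a new "Backup" type with the Kubernetes API...
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: backups.example.com        # must be <plural>.<group>
spec:
  group: example.com               # hypothetical API group
  scope: Namespaced
  names:
    plural: backups
    singular: backup
    kind: Backup
  versions:
    - name: v1
      served: true                 # exposed through the API
      storage: true                # version persisted in etcd
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                schedule:
                  type: string
                retentionDays:
                  type: integer
---
# ...then create an object of that type, just like a built-in resource.
apiVersion: example.com/v1
kind: Backup
metadata:
  name: orders-db
spec:
  schedule: "0 3 * * *"
  retentionDays: 7
```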

Architecture of Kubernetes

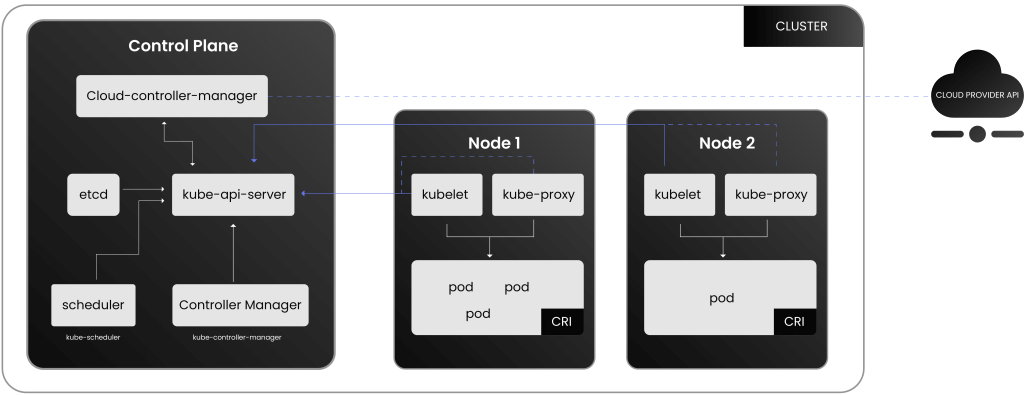

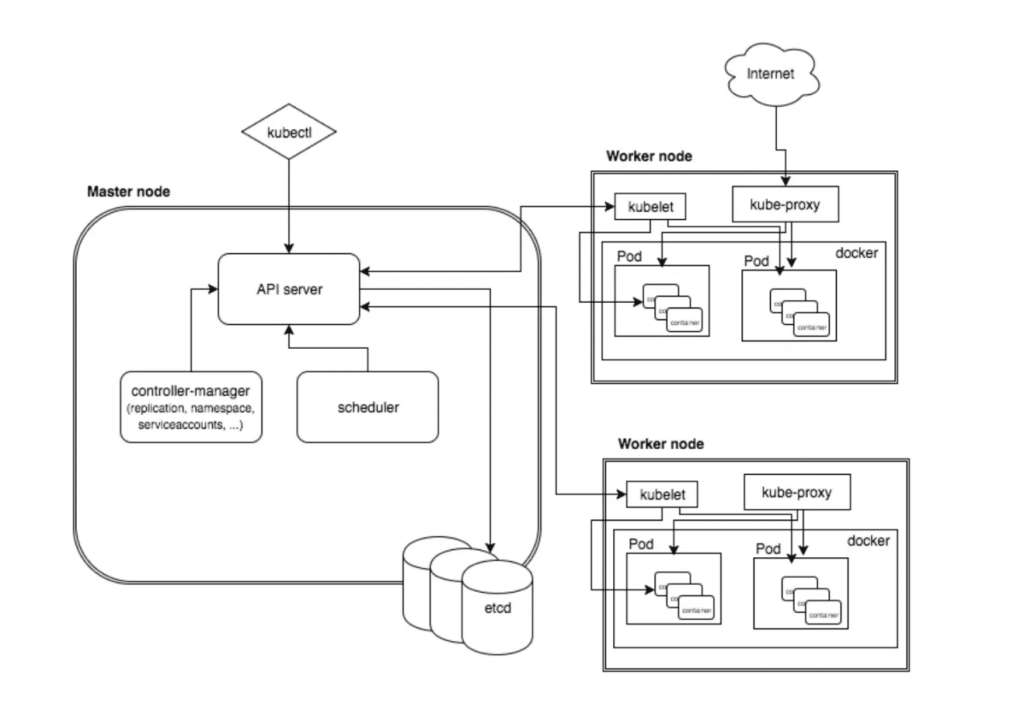

A Kubernetes cluster is a set of machines (physical machines, virtual machines, or cloud instances) that work together to deploy, manage, and orchestrate containerized applications. These machines fall into two main types: the Control Plane (Master) and Worker Nodes.

- Control Plane (Master Node): The control plane decides what runs on the worker nodes and how. Think of the control plane as the project manager on a new product team: it gives the worker nodes specific tasks. Its scheduler assigns Pods to worker nodes according to each application's needs. So, the control plane manages task assignment and the resources needed to perform those tasks; it manages the cluster rather than running application workloads itself.

- Worker Nodes: These machines (or virtual instances) run your containerized applications. Continuing the example above, worker nodes are the developers and operations team members who are assigned tasks and must carry them out well. Each worker node runs Pods (the individual tasks), and the kubelet on each node monitors that everything goes as planned and reports status, including failures, back to the control plane. Each worker node also runs kube-proxy and a container runtime, as discussed below. Remember, the worker node is where the real execution happens.

Now let us see what lies inside of them:

Control Plane Components

- API Server: The API Server is the entry point for all requests to the Kubernetes cluster. It processes commands and interacts with other components to execute them.

- etcd: It is the persistent storage where Kubernetes stores its cluster state, configurations, and metadata.

When the API Server receives a request to change the cluster state (e.g., adding a new Pod), it writes that change to etcd. etcd acts as the source of truth: when another component needs to know the current state (such as which Pods are running or the status of deployments), it asks the API Server, which reads from etcd.

This design keeps the Kubernetes cluster consistent, even in the event of failures. If a control plane component restarts, it can retrieve the latest cluster state through the API Server and resume operations smoothly.

- Scheduler (kube-scheduler): Assigns newly created Pods to worker nodes based on their resource requests and any placement constraints.

- Controller Manager: Runs the control loops that keep the cluster in its desired state, for example recreating Pods that were lost when a node failed.

Worker Nodes Components

- Kubelet: The worker bee of Kubernetes, an agent on each node that makes sure the containers in its Pods are running correctly and reports their status to the control plane.

- Container Runtime: Runs the containers using a CRI-compatible runtime such as containerd or CRI-O (direct Docker Engine support through dockershim was removed in Kubernetes 1.24). The runtime pulls images from the registry, isolates containers, manages the resources they use, and maintains the container lifecycle.

- Kube-proxy: Maintains network rules on each node (for example, iptables or IPVS rules) so that traffic sent to a Service reaches the Pods behind it.

Kubernetes Objects

| Object | Description | Example Use Cases |
|---|---|---|
| Pods | Smallest deployable units, holding one or more containers that work together. | Running an instance of a web server, like Nginx or Apache, in a containerized environment. |
| Services | Give a set of Pods a stable network identity and a consistent way to communicate. | Connecting a frontend web app to a backend API via a service. |
| Volumes | Provide storage for data; persistent volumes keep data beyond the lifetime of a single Pod. | Providing durable storage for stateful applications like databases. |
| ConfigMaps/Secrets | Store configuration data (ConfigMaps) and sensitive information (Secrets). | Configuring environment variables for an application, like database connection strings or feature toggles. |
| ReplicaSets | Keep the correct number of Pod replicas running. | Maintaining five replicas of a web server for high availability and load balancing. |
| Deployments | Manage ReplicaSets to handle updates and rollbacks for your Pods. | Rolling out a new version of a shopping cart microservice with zero downtime. |
| DaemonSets | Ensure certain Pods run on all nodes. | Running logging or monitoring agents like Fluentd or Prometheus Node Exporter on every cluster node. |
| StatefulSets | Manage stable identities for stateful apps. | Deploying a database like MongoDB or MySQL, where each instance requires its own persistent volume. |
| Jobs/CronJobs | Run tasks either once or on a schedule. | Running a database backup or processing analytics data at a scheduled time. |

Networking and Load Balancing

- Cluster Networking: Every Pod gets its own IP address without manual configuration.

- Service Networking: Handles load balancing and traffic routing to the Pods behind each Service.

- Ingress: In Kubernetes, Ingress is a resource that manages external HTTP/HTTPS traffic and routes it to the appropriate services inside the cluster based on the URL path or host. An Ingress controller (for example, ingress-nginx) implements these rules as a reverse proxy, forwarding external requests to internal microservices.

For example, in an e-commerce application, you can configure Ingress to direct traffic to different microservices like user authentication, product catalog, or order management based on the requested URL (e.g., www.example.com/login for authentication, www.example.com/products for the catalog).

Additionally, Ingress can manage SSL/TLS termination, ensuring that connections are secure by encrypting traffic between the user and the application. This provides a single, centralized point of entry for all external traffic, simplifying both traffic management and security enforcement.
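The e-commerce routing and TLS termination described above might be expressed as follows. This sketch assumes an ingress-nginx controller registered under the IngressClass nginx, hypothetical auth and catalog Services, and a TLS Secret named shop-tls; www.example.com is a placeholder host.

```yaml
# Sketch: path-based routing with TLS terminated at the Ingress.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shop
spec:
  ingressClassName: nginx          # assumes an ingress-nginx controller is installed
  tls:
    - hosts:
        - www.example.com
      secretName: shop-tls         # Secret of type kubernetes.io/tls (tls.crt, tls.key)
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /login           # authentication microservice
            pathType: Prefix
            backend:
              service:
                name: auth         # hypothetical Service
                port:
                  number: 80
          - path: /products        # product catalog microservice
            pathType: Prefix
            backend:
              service:
                name: catalog      # hypothetical Service
                port:
                  number: 80
```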

Kubernetes in DevOps: Enabling Automation with Efficiency

Kubernetes and DevOps go hand in hand. Kubernetes takes care of repetitive tasks, like deploying and scaling apps, on its own, and features like self-healing, autoscaling, and rollbacks help keep your application up. This is automation with Kubernetes.

It’s a shared platform where developers can containerize apps and operations teams can manage them easily. Plus, with access to container logs and metrics, everyone stays in the loop, promoting a continuous feedback cycle. Whether you’re deploying in the cloud or in a data center, Kubernetes offers a uniform interface. This consistency means less friction for teams, no matter where they work. In this way, Kubernetes supports collaboration and consistency.

Kubernetes in CI/CD Pipelines

Kubernetes integrates with CI/CD tools like Jenkins, GitLab, CircleCI, and Travis CI to automate the deployment and scaling of applications. When using Jenkins, Kubernetes can scale resources based on the workload and deploy containerized applications automatically. If anything goes wrong, Kubernetes can roll back quickly to the last stable version to keep the system running smoothly.

Similarly, with GitLab, Kubernetes helps manage deployments, scaling, and configuration directly from the pipeline. Kubernetes ensures the infrastructure remains consistent across all environments and new containers are deployed without any disruption. CircleCI and Travis CI also work seamlessly with Kubernetes, automating the process of building, testing, and deploying applications. Kubernetes handles the orchestration and scaling of containers, ensuring applications are always up-to-date and reliable.

Kubernetes encourages immutable infrastructure: once an application is packaged as a container image, running containers are not modified in place. Instead, Kubernetes applies updates by deploying new containers from a new image without affecting the system’s stability, ensuring fast, reliable deployments with minimal downtime.

Infrastructure as Code (IaC) with Kubernetes

Declarative Configuration: You describe the system’s desired state (how many pods, resources, etc.) in YAML or JSON files, and Kubernetes adjusts things automatically to match.

Version Control & GitOps: Kubernetes works with GitOps by keeping all configurations in Git. When you make changes in Git, tools like ArgoCD or Flux automatically apply them to the Kubernetes cluster. This ensures the cluster stays in sync with what’s in Git. If something goes wrong, you can easily roll back to the previous version. GitOps helps track changes, makes deployments faster, and keeps everything consistent.
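In Argo CD, the link between a Git path and a cluster is itself declared as a Kubernetes object. This sketch assumes Argo CD is installed in the argocd namespace; the repository URL, path, and target namespace are hypothetical.

```yaml
# Sketch: keep the "shop" namespace in sync with a folder in Git.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: shop
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://git.example.com/team/shop-config.git   # hypothetical repository
    targetRevision: main                                    # branch, tag, or commit to track
    path: k8s/production                                    # folder holding the manifests
  destination:
    server: https://kubernetes.default.svc                  # the cluster Argo CD runs in
    namespace: shop
  syncPolicy:
    automated:
      prune: true      # delete resources that were removed from Git
      selfHeal: true   # revert manual changes made directly in the cluster
```

Rolling back then amounts to reverting the commit in Git; Argo CD applies the previous state on its next sync.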

Consistency Across Environments: With Kubernetes, you define infrastructure once and reuse it across dev, staging, and production environments, ensuring consistency and fewer errors.

Key Use Cases for Kubernetes

Kubernetes simplifies scaling by automatically adjusting applications based on demand, ensuring smooth performance during traffic spikes. In high-performance computing, it handles complex workloads by optimizing resource allocation, job scheduling, and fault tolerance, reducing bottlenecks and downtime in fields like finance and research. For example, TensorFlow training jobs can scale across multiple nodes with Kubernetes, managing large datasets efficiently.

In microservices management, Kubernetes provides self-healing and automatic redeployment: if a microservice fails, Kubernetes restarts it, keeping services available and reducing manual intervention. It also lets each microservice scale independently, speeding up development cycles. Finally, because Kubernetes offers the same interface on-premises and in the cloud, applications can move between environments, supporting hybrid and multi-cloud flexibility.

Guardians of the Cluster: PKI and TLS/SSL in your Kubernetes

In Kubernetes, Public Key Infrastructure (PKI) and TLS/SSL certificates are deeply integrated and crucial to cluster security. Let’s dive into why they are so important and where exactly they fit into the Kubernetes ecosystem.

Why do PKI and TLS/SSL Matter in Kubernetes?

Public Key Infrastructure (PKI) and TLS/SSL certificates act as the first line of defense for your cluster, ensuring that only trusted entities gain access. They encrypt communication, establish trust, and prevent unauthorized access, keeping your Kubernetes environment safe.

Steps to Implement PKI and TLS/SSL Certificates in Kubernetes

Step 1: Set Up a Certificate Authority (CA)

To begin, you need a Certificate Authority (CA) that will sign your certificates. This can be either a self-signed CA that you run yourself or a trusted external CA. For a self-signed CA, you first generate a private key for the CA and then use that key to create a self-signed root certificate.

Step 2: Generate Server and Client Certificates

After setting up the CA, the next step is to create server and client certificates. Start by generating a private key for the server. Then, create a Certificate Signing Request (CSR) for the server and have it signed by your CA. If needed, you can also generate and sign a client certificate for secure client authentication.
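If you would rather let the cluster perform Steps 1 and 2, cert-manager can bootstrap a private CA and issue server certificates from manifests instead of manual key and CSR commands. This is a swapped-in alternative to the manual process, sketched under these assumptions: cert-manager is installed in its default cert-manager namespace, and the names internal-ca, internal-ca-issuer, and web-server-tls, as well as the DNS name, are hypothetical. cert-manager writes each resulting key and certificate into the named Secret, which also takes care of Step 3.

```yaml
# 1. A bootstrap issuer that can only self-sign.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: selfsigned-bootstrap
spec:
  selfSigned: {}
---
# 2. The CA key pair and self-signed root certificate (Step 1).
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: internal-ca
  namespace: cert-manager          # ClusterIssuers read CA Secrets from this namespace by default
spec:
  isCA: true
  commonName: internal-ca
  secretName: internal-ca-key-pair # CA private key and certificate are stored here
  privateKey:
    algorithm: ECDSA
    size: 256
  issuerRef:
    name: selfsigned-bootstrap
    kind: ClusterIssuer
---
# 3. An issuer that signs certificates with the CA above.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: internal-ca-issuer
spec:
  ca:
    secretName: internal-ca-key-pair
---
# 4. A server certificate signed by the CA (Step 2).
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: web-server-tls
  namespace: default
spec:
  secretName: web-server-tls       # server key and signed certificate land here
  dnsNames:
    - web.default.svc.cluster.local
  issuerRef:
    name: internal-ca-issuer
    kind: ClusterIssuer
```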

Step 3: Store Certificates in Kubernetes Secrets

Once you have generated the server and client certificates, store them securely in Kubernetes Secrets. These secrets will hold the server’s private key and the signed certificate, allowing your Kubernetes services to use them for encrypted communication.
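Kubernetes has a dedicated Secret type for this purpose, kubernetes.io/tls, which expects the keys tls.crt and tls.key. The sketch below uses placeholders for the base64-encoded PEM data; the name web-server-tls is hypothetical.

```yaml
# Sketch: a TLS Secret holding a server certificate and its private key.
apiVersion: v1
kind: Secret
metadata:
  name: web-server-tls
  namespace: default
type: kubernetes.io/tls
data:
  tls.crt: <BASE64-ENCODED-CERTIFICATE>   # placeholder: signed server certificate (PEM), base64-encoded
  tls.key: <BASE64-ENCODED-PRIVATE-KEY>   # placeholder: server private key (PEM), base64-encoded
```

In practice, kubectl create secret tls web-server-tls --cert=server.crt --key=server.key builds the same object directly from the PEM files, assuming those file names.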

Step 4: Configure Kubernetes Services to Use TLS

Next, configure your Kubernetes services to use the TLS certificates. This involves referencing the Secrets you created in the YAML manifests you deploy (for example, in a Deployment's volumes or an Ingress's tls section), so the services use the certificates for encrypted communication.
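When the application terminates TLS itself, one way to do this is to mount the Secret as a volume. This sketch assumes a hypothetical image that serves HTTPS on port 8443 and reads its certificate and key from /etc/tls, and a Secret named web-server-tls; terminating TLS at an Ingress instead works through the Ingress tls section.

```yaml
# Sketch: mount a TLS Secret into the Pods of a Deployment.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: registry.example.com/web:1.0   # hypothetical image serving HTTPS on 8443
          ports:
            - containerPort: 8443
          volumeMounts:
            - name: tls
              mountPath: /etc/tls    # files appear as /etc/tls/tls.crt and /etc/tls/tls.key
              readOnly: true
      volumes:
        - name: tls
          secret:
            secretName: web-server-tls
```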

Step 5: Verify TLS Setup

Finally, verify that the TLS setup works correctly by testing the service’s connection. Use tools such as curl or OpenSSL to ensure that the service uses the certificates for secure, encrypted communication.

Securing Communication with TLS Certificates

Kubernetes relies on TLS (Transport Layer Security) to encrypt communication between its components, such as the API server, etcd, kubelets, and clients like kubectl. Traffic between Pods and services is not encrypted by default, but it can be protected with TLS as well, either by the applications themselves or through a service mesh. K8s safeguards your cluster and data by using PKI and TLS certificates: whether it is traffic between the Kubernetes API server and the cluster’s components or communication between services, TLS certificates keep it encrypted and private. In a nutshell, TLS certificates are like a protective shield that keeps communication within Kubernetes safe from eavesdroppers and attackers.

PKI is at the heart of trust in Kubernetes. It is the framework that manages digital certificates and cryptographic keys. In Kubernetes, PKI certificates serve as the digital ID cards for all the components, helping them verify each other’s identity. This trust is established between different entities, such as:

- Nodes and the API server

- Kubelets and the control plane

- Users accessing the cluster

- Services within the cluster

Without PKI, Kubernetes would not have a way to confirm that each piece of the system is who it says it is. Imagine trying to run a cluster where anyone could impersonate another service or user; chaos, right?

Where Do TLS/SSL Certificates Fit in Kubernetes?

In Kubernetes, TLS/SSL certificates are used in various critical areas:

- API Server: The API server is the brain of Kubernetes, and it needs TLS certificates to securely communicate with users and other components.

- Kubelet: Each node’s Kubelet, which is responsible for managing containers, uses TLS certificates to establish secure connections with the API server.

- etcd: The etcd server, which stores cluster data, also uses TLS to ensure that all communications remain confidential.

- Services: Any service exposed to external traffic, or internally between Pods, can be secured with TLS certificates to prevent data interception.

Tools like kubeadm automatically generate many of these certificates when setting up a cluster, but you can also bring your own certificates if you want more control over security.

Preventing Unauthorized Access

- Authentication and Authorization: PKI and TLS certificates let Kubernetes verify the identity of users, services, and components, and authorization mechanisms such as RBAC then decide what each authenticated identity may do, blocking unauthorized entities.

- Client Certificates: These certificates verify the identity of entities interacting with the cluster, ensuring secure access and reducing risks of impersonation or unauthorized access.

- Production Security: In high-stakes production environments, PKI and TLS certificates are critical in preventing breaches that could lead to data exposure or operational failure.

Challenges of Managing Certificates

- Lifecycle Management: Keeping track of certificate renewals, expirations, and distribution across all Kubernetes components can be complex and error-prone.

- Cert-Manager Solution: Tools like cert-manager automate certificate issuance, renewal, and management, reducing human error and keeping certificates up to date.

- Simplifying Security: By automating certificate processes, Encryption Consulting's CertSecure Manager, a certificate lifecycle management (CLM) product, helps maintain consistent security throughout the cluster without the hassle of manual management.

Kubernetes Security Risks and Best Practices

Kubernetes is a robust container orchestration platform. However, no platform is free of security risks, no matter how strong it is. So, let us investigate the key attack vectors and risks, and the best practices to follow!

| Security Risk | Description | Best Practice | Tools to Use |
|---|---|---|---|
| Misconfigured Cluster | Weak or default access controls can allow unauthorized users to manipulate the cluster. | Use strong authentication and authorization, regularly audit access, and apply robust network policies. | Kube-bench, OPA/Gatekeeper |
| Vulnerable Container Images | Using outdated or unverified container images can introduce malware or other security issues. | Only pull images from trusted repositories, perform regular vulnerability scans, and frequently update containers. | Trivy, Clair |
| Insider Threats | Compromised or malicious insiders can exploit their access to the cluster. | Use Role-Based Access Control (RBAC) to limit permissions and separate duties, and monitor activity in the cluster. | Falco, Audit Logs |
| Denial-of-Service (DoS) Attacks | Attackers can exhaust the cluster’s resources, causing a denial of service. | Use resource quotas, limit ranges, and network protection mechanisms to limit the impact of DoS attacks. | ResourceQuota/LimitRange, Calico |
| Pod-to-Pod Communication | Insufficient network segmentation allows lateral movement across compromised Pods. | Encrypt Pod communication using TLS and apply network segmentation to isolate sensitive workloads. | Istio, Cilium |
| Insecure API Endpoints | API endpoints exposed to the public can be exploited by attackers to gain unauthorized access. | Secure API endpoints with proper authentication, restrict access, and regularly audit API traffic. | OAuth2 Proxy, Kubeaudit |
| Container Breakouts | Attackers exploiting vulnerabilities within containers can escape into the host system. | Apply strong isolation practices, update container runtimes, and harden the underlying host system. | gVisor, Kata Containers |
| Weak Secrets Management | Sensitive data stored in plain text or in inadequately encrypted Secrets can be exposed. | Enable encryption at rest for Kubernetes Secrets and enforce strict access control for secret data. | Vault, Sealed Secrets |
| Software Supply Chain Attacks | Compromised third-party dependencies or container images can introduce backdoors and vulnerabilities into the cluster. | Implement strict control over the software supply chain, including verifying image signatures and using trusted sources for container images. | Cosign, Notary |
| Privilege Escalation | Misconfigured roles or exploitable vulnerabilities can let attackers escalate their privileges inside the cluster. | Apply the principle of least privilege and review access permissions frequently. | Kube-bench, RBAC Manager |
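Several of the native best practices in the table can be expressed directly as manifests. The sketch below assumes a namespace called shop and a user jane (both hypothetical) and combines a least-privilege Role and RoleBinding, a default-deny NetworkPolicy, and a ResourceQuota; the quota values are illustrative. NetworkPolicies take effect only if the cluster's network plugin (for example, Calico or Cilium) enforces them.

```yaml
# Least privilege: a Role that can only read Pods in one namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: shop
rules:
  - apiGroups: [""]                # "" is the core API group
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
# Grant that Role to a single (hypothetical) user.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader-binding
  namespace: shop
subjects:
  - kind: User
    name: jane
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
---
# Segmentation: deny all inbound Pod traffic unless another policy allows it.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: shop
spec:
  podSelector: {}                  # selects every Pod in the namespace
  policyTypes:
    - Ingress                      # no ingress rules listed, so all ingress is denied
---
# DoS mitigation: cap the total resources the namespace can claim.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: shop-quota
  namespace: shop
spec:
  hard:
    requests.cpu: "10"
    requests.memory: 20Gi
    limits.cpu: "20"
    limits.memory: 40Gi
    pods: "50"
```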

How Encryption Consulting Can Help

Encryption Consulting secures Kubernetes clusters with tailored services like PKI implementation, certificate lifecycle management, and Secrets management. Our products, such as CodeSign Secure for secure code signing and Key Management solutions, ensure sensitive data is protected. We offer TLS setup, API security, and compliance audits, ensuring robust data security and Kubernetes resilience for on-premises or cloud deployments.

Conclusion

Kubernetes is a powerful tool that simplifies container orchestration, making it indispensable for modern DevOps and application management. From automating deployments to ensuring high availability, its features cover the whole application lifecycle. With Kubernetes, you not only streamline your operations but also enhance security through integrated PKI and TLS support.

Want to dive deeper into the world of security, PKI, Cloud, Certificates, etc.? Be sure to explore more insightful blogs at the Encryption Consulting Education Center. Get customized training from our experts, and stay tuned for the latest trends and tips to elevate your tech game!
