- GenAI and the working of these complicated systems

- In what ways are threat actors exploiting GenAI?

- Phishing

- Deepfakes

- Creation of malware

- Data manipulation

- NIST recommendations and special publications

- Top strategies to protect you from GenAI threats

- A Recent report on risks posed by GenAI

- How can Encryption Consulting help?

- Conclusion

Every day, new GenAI solutions promise to improve consumer experiences, automate repetitive and strenuous jobs, promote creativity, and strengthen competitive advantages. But the rapid adoption of generative AI by enterprises is also increasing security risks. Although GenAI systems are powerful tools for your organization, their complex design increases the risk of cyberattacks on your company’s data, infrastructure, and outputs. You must stay watchful and implement good security measures to protect you and your organization from potential threats.

GenAI and the working of these complicated systems

A subset of artificial intelligence technology known as “generative AI,” or “GenAI,” generates new content from a dataset of prior examples. GenAI systems leverage extensive training datasets and user context (prompts) to create texts, images, audio, and videos. It uses sophistic algorithms and neural networks to replicate human creativity and generate original content.

At times, these datasets could be so large they could amount to the size of the entire internet. Hence, organizations would have to look for third parties to provide such models and capably run them on demand.

GenAI’s ability to handle vast amounts of data, respond to diverse queries, and continually learn makes it complex. When you integrate third-party APIs, the complexity grows as each service must work seamlessly together, and you must build a strong architecture for managing different protocols, error handling, and data formats. Load balancing and concurrency control become crucial when managing multiple data streams as real-time inputs, user interactions, and API responses are required.

At the same time, you need to prioritize data privacy, ethical behavior, and accuracy; all should be done while keeping your systems flexible enough to learn and adapt. It is important to be informed about emerging threats that could impact your systems, and that is what we will dive into next.

In what ways are threat actors exploiting GenAI?

Threat actors are using the advanced aspects of generative AI with bad motives, given how incredibly it can produce realistic text, graphics, and audio that are human-like in every sense. Such technology easily allows the attackers to enhance their attack vectors, how they evade detection, and, most importantly, how they execute and escalate their attacks. The situation is made worse because it is becoming difficult to differentiate between authentic and fabricated information, which has caused several alarm bells in many sectors.

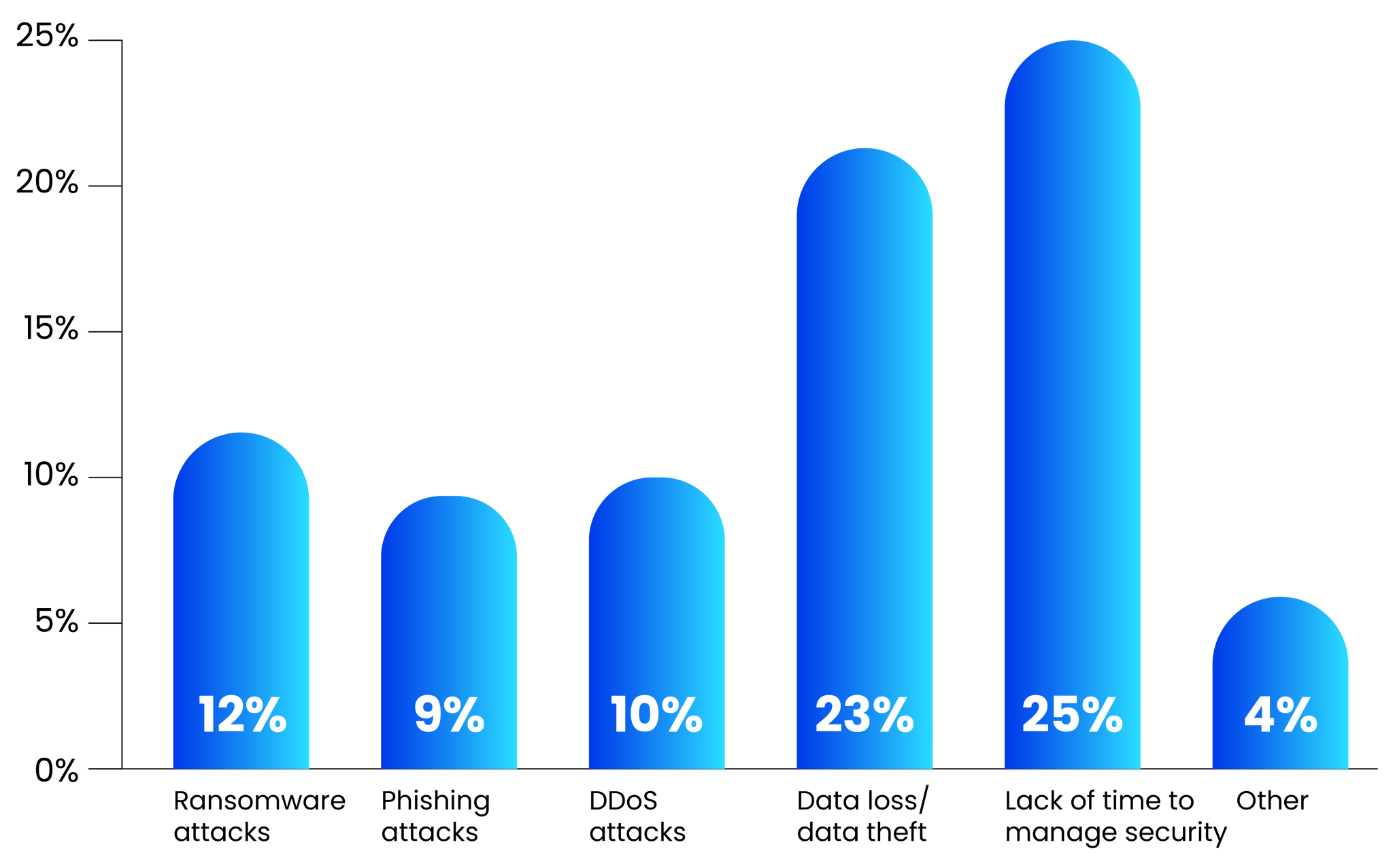

Threats like ransomware, phishing, DDoS, deepfakes, and data theft are on the rise. A report by DigitalOcean represents this trend and reveals that the biggest security concern for businesses is the lack of time to manage security (25%), followed by data loss or theft (23%), ransomware (12%), and DDoS attacks (10%). Understanding the adversaries’ actions is important as this knowledge helps formulate strong countermeasures and reduce a particular threat.

Phishing

Phishing comes under social engineering attack, in which attackers send fraudulent emails, instant messages, phone calls, or text messages to make them look like real ones. Threat actors can use GenAI to design fake emails that pull in the essence of real emails in content and deceive recipients by appearing authentic. This means that there is a possibility of you engaging in such scam emails. Thus, it is advisable for you to be careful.

Sensitive information like account details, email IDs, and personal information can all be accessed by cybercriminals. This often leads to identity infiltration or financial loss. Phishing threats may lead to hackers gaining entry into a business’s internal network, enabling them to exfiltrate information, deploy ransomware, or interrupt business operations.

Spear phishing, whaling, and pharming are targeted phishing techniques that exploit trust and technology. Spear phishing focuses on individuals using personal information to craft authentic-looking messages, which is the first step in breaching a company. Whaling targets high-profile executives like CEOs and aims to steal credentials and access sensitive data. Pharming redirects users to fraudulent websites by manipulating DNS servers or devices. It does not require any user interaction to compromise credentials or data.

Some notable examples of phishing cyberattack include the following cases.

-

This exemplary case of business email compromise (BEC) involves a Lithuanian man, Evaldas Rimasauskas. He stole over $100 million from Google and Facebook between the years 2013 and 2015. He impersonated a trusted supplier and sent fake invoices to the organization’s finance departments. The companies unknowingly paid the invoices.

-

The healthcare provider Elara Caring, based in the United States, was targeted by a phishing attack in 2020 with a successful compromise of two of its employees’ email accounts. The breach in such email accounts resulted in the sharing of private details of more than 100,000 elderly patients, including their names, dates of birth, financial and banking information, social security numbers, driver’s license information, and details about their health insurance. The attackers sustained their ill-motive activities for a week before noticing and controlling the intrusion.

One study also showed that in 2023, 71% of organizations in the United States were victims of an effective phishing attack at some point in the year. Phishing is placed at the top of the list of cybercrimes, as reported by the FBI. In 2023, they recorded close to 200,000 more instances of such attacks than they did four years earlier.

How to overcome a phishing attack?

It is possible to detect phishing scams by being observant and noting some clues associated with emails, texts, or websites. There are usually warning or even alarming statements intended to cause an immediate response, such as threats of shutting down your account after suspicious activity has been detected.

These emails may also come from an unknown source or even claim to be from a known organization, but instead of addressing you by your name, they use a generic term like ‘Dear Customer.’ Such requests for passwords or bank details bear a significant degree of risk since most, if not all, organizations do not use emails, messaging services, or text messages to obtain this type of sensitive information from their clients. In order not to be a target of fraud, avoidance of complacency and communication verification is an important practice.

If you receive a phishing email, you should take a moment and not take the interaction further. You should not click on any link or download/open any attachments or replies. You should look out for inconsistencies like the sender’s email address being suspicious or unreasonable requests regarding personal information. You must alert your IT personnel or use the phishing report option on your email service. However, delete the email from your inbox and trash it fully.

And in case you still interacted with the email, you should change your passwords at all accounts right away and watch out for anything suspicious at your accounts as well for safe borders. You should also advise your coworkers to stay alert and avoid such cyberattacks.

Deepfakes

A deep fake is a form of synthetic media where genAI is used to create highly realistic fake content, typically videos, images, or audio. Using generative adversarial networks (GANs) and sophisticated neural networks, threat actors can produce deepfake audio and video replicating an individual’s speech patterns, facial expressions, and voice with high fidelity. Such impersonation is increasingly employed in social engineering schemes, fraud, and spear-phishing attacks, often bypassing traditional verification mechanisms.

Deepfakes are being integrated into malware delivery systems. For instance, fake video calls or synthetic voices can trick users into downloading malicious payloads during what appears to be legitimate interactions.

To get a better idea about deepfakes, you should go through past incidents.

-

In 2019, when deepfake audio technology was not very advanced, a voice that mimicked the CEO of a UK-based energy company was employed to facilitate a transfer of $243,000 to an account controlled by imposters by one of the firm’s subsidiaries.

-

In early 2020, a bank manager in Hong Kong was tricked into transferring $35 million using deepfake voice technology. The fraudsters used a fake voice that sounded like the company director and sent fake emails to authorize the transfer for a fake acquisition. The scam involved at least 17 people, and the money was transferred into multiple accounts across the U.S.

How can you detect and overcome a deepfake attack?

There are some specific observations by which you can detect deepfakes. Common signs of deepfake videos include odd shadows on the face, unnatural skin tones, strange blinking patterns, unrealistic beards and hair, fake glare on glasses, and blurry edges around the face. In audio, you may notice mismatched lip-syncing or robotic voice sounds. To detect these manipulations, tools like deepware scanners or Microsoft’s video authenticators use machine learning to spot small inconsistencies, such as unusual facial movements, micro-expressions, and eye movements.

Cybersecurity is a must-have in such scenarios to overcome these challenging attacks, which have the capacity to put down the whole organization. You can adopt the following guidelines to safeguard yourself from deepfake attacks.

-

Deploy AI-powered tools to detect manipulated media.

-

Use encrypted communication platforms to protect sensitive data.

-

Implement digital watermarking to authenticate proprietary media.

-

Establish strict protocols for media verification and content moderation.

-

Require biometric verification for sensitive communications.

-

Monitor online platforms for potential misuse of company-related media.

-

Protect media and voice data with encryption and secure storage.

-

Develop a crisis management plan to address deepfake incidents.

-

Advocate for laws criminalizing malicious deepfake use and collaborate on industry standards.

-

Educate employees about deepfake risks and detection techniques.

Creation of malware

Malicious software (in short, malware) is any software intentionally designed to harm, exploit, or disrupt computers, networks, or devices. GenAI enables even inexperienced threat actors to generate malicious code by reducing the technical expertise needed to develop malware. Generative AI, through advanced models like transformers and neural networks, can automate the creation of sophisticated malware. These AI tools can produce polymorphic malware that frequently changes its structure and makes it harder to detect.

AI generated malware can leverage adversarial techniques to bypass conventional detection systems. By exploiting vulnerabilities in machine learning models used in security software, this malware can dynamically alter its behavior and avoid endpoint detection and response (EDR) systems, firewalls, and antivirus programs. AI can even assist in designing ransomware with advanced encryption techniques or generating scripts to exploit vulnerabilities. This misuse of generative AI poses significant cybersecurity challenges and requires advanced defenses to counteract these attacks.

Malware comes in many forms, each with its own way of causing harm.

-

A virus attaches itself to files, spreads when you open them, and damages your data.

-

Worms are sneaky and spread across networks without your help.

-

Then there are Trojans, which look like harmless software but deliver harmful surprises once installed.

-

Ransomware locks your files or systems and demands money to give them back.

-

Spyware secretly watches what you do and ends up stealing your personal information, like passwords.

-

Adware bombards you with annoying ads, sometimes leading you to dangerous sites.

-

In Rootkits, hackers take control of your computer and stay hidden.

-

Keyloggers record every key you press to steal sensitive details.

-

Botnets turn your device into part of a hacker’s network for attacks, and fileless malware works entirely in your system’s memory without leaving any trace behind.

Each type poses serious risks, so staying protected is a necessity. To understand how serious this attack is, you should go through the major incidents that have happened. Attacks like the Kaseya ransomware or the SolarWinds breach show how damaging cyberattacks can be to businesses, supply chains, and sensitive information.

-

The Kaseya attack happened in July 2021 when the REvil ransomware group took advantage of a flaw in Kaseya’s VSA software, which is used by IT service providers. By attacking the software’s update system, the attackers spread ransomware to around 1,500 businesses worldwide and demanded $70 million. This major event showed the serious risks and weaknesses in the supply chain.

-

The SolarWinds attack in 2020 was a major cybersecurity breach where hackers inserted malicious code into updates for SolarWinds’ Orion software. This affected around 18,000 organizations, including U.S. government agencies and big companies. The attackers used a vulnerability in the software to access sensitive data and systems. The attack highlighted the risks associated with compromised software updates. It also affected critical operations across both private and public sectors.

How to overcome malware attacks?

You need a multi-layered strategy to protect against malware while ensuring smooth business operations. First, regularly updating software and patching both applications and operating systems is essential. These updates fix vulnerabilities that malware could exploit. The Application of the principle of least privilege ensures that users only have the necessary access to their roles and limits the scope of potential attacks. Behavioral-based detection tools identify unusual activity, even when malware doesn’t match known signatures.

Organizations should also regularly test and audit their security systems with simulated attacks to identify vulnerabilities. Continuous endpoint monitoring with EDR technology can detect and respond to suspicious activity in real time by ensuring quick mitigation of threats. Application greylisting can be applied to endpoints to block unauthorized software from accessing the internet or modifying files.

Network segmentation is another approach that isolates critical systems from less sensitive ones to prevent the spread of malware if an attack occurs. Additionally, using cloud-based security solutions provides scalable protection. Automated backups ensure business continuity in case of an attack and allow for quick recovery.

Lastly, educating users on security best practices and common threats like phishing significantly reduces human error, which is often a primary vector for malware. By combining these strategies, you can effectively prevent malware attacks while maintaining productivity.

Data manipulation

AI systems can inherit biases like algorithmic bias, adversarial bias, and omission bias from training data, which can lead to skewed outcomes. These biases may result in harmful consequences, such as discrimination in applicant tracking systems, inaccurate healthcare diagnostics for patients, and biased predictive policing targeting marginalized communities. Generative AI can be leveraged to create convincing fake reviews, product testimonials, and other types of content, enabling malicious actors to manipulate public perception or damage brand reputation. By using advanced natural language generation (NLG) techniques, AI can produce authentic-sounding content that mimics human language, which is difficult to detect.

Large language models (LLMs) are used in applications like virtual assistants and chatbots and require vast training data, often sourced through web crawlers scraping websites. This data can typically be collected without your consent and may include your personally identifiable information (PII). Other AI systems providing personalized experiences may also gather personal data.

Usually, professional hackers are responsible for these attacks and manipulate records or data in the hope of making money. Some incidents even say that these attacks can be an insider threat by employees or ex-employees who know all the ins and outs of the company. Tesla Motors can be such an example where its former employee attacked the organization. More examples are mentioned below.

-

Tay chatbot by Microsoft was an AI chatbot designed to learn from interactions with users on Twitter. Attackers manipulated the data, and the bot began to upload offensive posts to its Twitter account. It caused Microsoft to shut down the service only 16 hours after its launch.

-

The Twitter Bitcoin Scam of 2020 involved hackers gaining control of high-profile Twitter accounts, including those of Elon Musk, Barack Obama, and other prominent figures. Once in control, they posted fraudulent tweets promoting a cryptocurrency scam and urged followers to send Bitcoin to a specific address with the promise of doubling their money. The scam led to financial losses for victims and raised serious concerns about the security of social media platforms. This incident significantly damaged trust in Twitter’s data integrity and account security mechanisms.

What should you do under a data manipulation attack?

Detecting a data manipulation attack requires careful monitoring and analysis of an organization’s systems. The first step for you is to keep an eye out for unusual activities, such as unexpected changes in data or spikes in access to sensitive information. Reviewing system logs can help identify unauthorized changes or suspicious behavior. Cross-checking data against other sources or historical records can reveal inconsistencies. Analyzing metadata can show signs of unauthorized edits or changes. You can monitor user behavior and network traffic, and it detects unusual activities, such as someone accessing data they normally wouldn’t.

If you find a data manipulation attack has occurred, then it is crucial to act quickly to minimize damage and prevent further breaches. First, the affected systems should be isolated to prevent the manipulation from spreading. A thorough investigation should follow to identify the source of the attack, like how the data was manipulated and what systems were compromised.

When the extent of the attack is determined, you should notify stakeholders, employees, customers, and relevant authorities to maintain transparency and trust. After that, restore your lost or altered data from secure backups, making sure the backups haven’t been tampered with. It is crucial to conduct a full security audit to identify any vulnerabilities that were exploited and patch them.

Implementing additional security measures, such as enhanced monitoring or updated authentication protocols, can help prevent future incidents. Finally, legal and regulatory obligations must be reviewed to ensure compliance with data protection laws and to mitigate potential legal repercussions. Throughout the process, communication with affected parties should be maintained to manage the impact on the organization’s reputation and trust.

Apart from these technology-centric attacks, there are some environmental concerns as well. It includes a significant impact on the environment through high water usage for cooling (5.4 million liters for GPT-3 training) and energy consumption, leading to carbon emissions exceeding 600,000 pounds per model.

NIST recommendations and special publications

NIST defines three key categories of threats to GenAI systems. These involve Integrity, Availability, and Privacy. These principles guide how organizations should manage and mitigate risks, including those associated with emerging technologies like GenAI.

-

Integrity focuses on ensuring the accuracy, consistency, and trustworthiness of data and systems. With GenAI, risks related to integrity include data manipulation. AI might generate false or misleading information, such as deepfakes/fake news and model manipulation. In such cases, AI models may be tampered with to produce biased or harmful outputs. Data poisoning is another significant risk to integrity, where malicious actors inject harmful data into the training process of an AI model. It leads AI models to produce incorrect or misleading outputs. An example of data poisoning could be introducing fake user interactions into a recommendation system to influence the model’s decisions.

-

Availability ensures that services and data are accessible when needed. In the context of GenAI, risks involve service disruptions caused by attacks like denial-of-service (DoS) or distributed denial-of-service (DDoS). When AI systems fail or become unavailable, they disrupt business operations and even bring them to a halt. A key component for improving the performance, quality, and availability of AI models is the Retrieval-Augmented Generation (RAG) layers. The RAG layer ensures resilience and uptime of critical tasks. Securing AI models and associated databases is important to ensure continuous operation and prevent disruptions in services.

-

Lastly, privacy focuses on protecting personally identifiable information (PII) and adhering to legal standards such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). GenAI can pose privacy risks through data leakage, where sensitive information might be unintentionally exposed. AI generated content could inadvertently reveal private data. Moreover, prompt and system context extraction in GenAI involves the use of user prompts and system context to generate responses, which can sometimes lead to privacy breaches. This happens when AI models unintentionally pull from personal or confidential data embedded within prompts or previous interactions, which might not be consented to by the user.

The National Institute of Standards and Technology (NIST) provides several Special Publications (SPs) that can help organizations address and mitigate the risks associated with the use of Generative AI. These guidelines offer a structured approach to managing the challenges and concerns posed by GenAI technologies.

-

NIST SP 800-53 (Security and Privacy Controls for Information Systems and Organizations)

The primary objective of SP 800-53 is to provide a structured approach for managing cybersecurity and privacy risks by offering controls that address a wide range of threats. These controls are divided into 18 families, including areas such as access control, system and communications protection, incident response, and contingency planning.

-

NIST SP 800-37 (Risk Mitigation for Information Systems and Organizations)

The primary use of SP 800-37 is to guide organizations through the process of assessing, implementing, and continuously monitoring security controls to mitigate risks. It helps ensure that systems are secure, compliant with regulations, and resilient to threats throughout their lifecycle.

-

NIST AI 600-1 (NIST AI Risk Management Framework)

This framework specifically addresses the unique risks posed by Generative AI. It covers issues like data privacy, model bias, transparency, accountability, and ethical concerns, providing best practices for managing and mitigating these challenges.

Top strategies to protect you from GenAI threats

It is essential for individuals and organizations to adopt best practices to avoid these emerging risks. Here, we’ll explore key strategies to help you stay protected from the evolving threats posed by generative AI. It ensures that your data, privacy, and reputation remain secure in this modern world where data breaches and GenAI attacks are so common.

-

Implement Strong Input Validation

You should implement robust mechanisms so that the information being ingested into your system is secure and logical. By checking incoming information, it is possible to block inappropriate and destructive inputs capable of damaging your system.

-

Implement Encryption

Data encryption transforms sensitive information into unreadable code and ensures its security even if attackers gain access. By using encryption algorithms, only authorized users with the decryption key can access the original data and prevent misuse.

-

Sensitive Credentials

Safeguarding your credentials is vital. Use secure vaults for storage, regularly rotate keys, and enforce access controls to protect sensitive information like OAuth tokens and API keys from unauthorized access.

-

Invest in Redundancy and Failover Mechanisms

It simply refers to having a backup system that can work as a replacement when something goes out. This ensures that your services stay up and running, even if one part of your system is compromised.

-

Conduct Regular Stress Testing

You should design your systems by keeping the worst scenarios in your mind. Stress testing should always be conducted in anticipation of DoS attacks. By emulating abnormal traffic, you can find architectural weaknesses and properly structure the systems for high loads.

-

Integrate Code Signing with Privacy Audits

Code signing refers to attaching a digital signature to software or code to ensure its authenticity and verify its source. By using code signing tools such as CodeSign Secure, the integrity and ownership of the code can be protected. Additionally, conducting regular privacy audits helps ensure that user data remains secure and confidential.

-

Traditional DDoS Mitigations

You should use firewalls and special tools to catch bad traffic by looking for unusual patterns in your services. This helps prevent DDoS attacks and ensures real users can always use your services without any problems.

-

Adopt Data Minimization Techniques

Data minimization principles should be practiced, including providing only necessary information. One lowers his risk of exposure in the case of a breach by minimizing the amount of personal data he owns.

-

Implement Strong Access Controls

You must ensure that only authorized individuals can access sensitive data by using multi-factor authentication (MFA) and role-based access controls (RBAC). This limits access to critical data and reduces the risk of unauthorized manipulation.

A Recent report on risks posed by GenAI

Recently, a report was published by HP Wolf Security on September 24, 2024. New evidence has emerged that demonstrates how attackers are using artificial intelligence (AI) to create sophisticated malware. As the cybersecurity domain evolves, this new development highlights the growing role of AI in cybercrime and makes traditional defense strategies even more vulnerable.

Key findings from HP Wolf Security’s report

The report reveals several alarming tactics that threat actors are leveraging:

- AI Generated Malware Scripts

Attackers increasingly use AI tools to develop malicious scripts, automating the creation of more effective and harder-to-detect malware. By using AI, they can quickly generate variants of malware, bypassing traditional signature-based detection systems.

- Embedded Malware in Image Files

The report also points to an emerging trend where malware is embedded in image files. This method exploits how devices process images, allowing malware to go undetected by conventional security mechanisms.

-

Malvertising

Another method highlighted in the report is using malvertising, where attackers insert rogue PDF tools into ads. When unsuspecting users click on these ads, they unknowingly download malicious files, leading to system compromise.

How can Encryption Consulting help?

As GenAI-driven threats continue to evolve, Encryption Consulting supports organizations through its Encryption Advisory Services. Through a data discovery exercise, we locate both structured and unstructured sensitive data and provide actionable strategies for managing it securely. Our risk assessment process identifies vulnerabilities within your organization and offers tailored solutions to minimize the risk of data breaches. We also deliver in-depth assessment and strategy, create a clear roadmap, and establish data protection frameworks to strengthen security and make the company compliant.

Our Data Protection Program Development emphasizes governance, risk monitoring, and performance metrics to enhance overall security. Additionally, we support the integration and deployment of advanced technology solutions to defend against AI-driven cyber threats effectively. Encryption Consulting helps organizations avoid cyberattacks by combining strategic insights and technical expertise.

Conclusion

To summarize, the evolution of generative AI has its merits and threats, which no organization can afford to ignore. Attack vectors such as phishing, voice deepfakes, and the generation of malicious malware, including data abuse, have become a worrying trend as bad actors are weaponizing GenAI. This poses an escalation of danger to security, privacy, and trust. Organizations can counter such threats successfully by adopting strong best practices. These best practices include input checks, redundancy policies, and effective access limitations in conjunction with a forward-looking monitoring and auditing process.

Finding a middle ground between the availability of tools designed for efficient GenAI activities and implementing rigorous safeguards to ensure their secure and ethical use in a rapidly growing digital ecosystem is important.

- GenAI and the working of these complicated systems

- In what ways are threat actors exploiting GenAI?

- Phishing

- Deepfakes

- Creation of malware

- Data manipulation

- NIST recommendations and special publications

- Top strategies to protect you from GenAI threats

- A Recent report on risks posed by GenAI

- How can Encryption Consulting help?

- Conclusion